Robots and Ethics

Introduction

Robots can help humanity, and they have done so since the mid-20th century. While initially used mostly for industrial and military applications, they are now emerging in other areas, such as transportation, healthcare, education, and the home environment. Contemporary robotics is increasingly based on artificial intelligence (AI) technology, with human-like abilities in sensing, language, interaction, problem solving, learning, and even creativity. The main feature of such ‘cognitive machines’ is that their decisions are unpredictable, and their actions depend on stochastic situations and on experience. The question of accountability for the actions of cognitive robots is therefore crucial. The rapidly increasing presence of cognitive robots in society poses growing challenges: they affect human behaviours and induce social and cultural changes, while also generating issues related to safety, privacy and human dignity[1].

Robot Ethics

“In 2017, the Rathenau Instituut issued a report on ‘Human rights in the robot age’, commissioned by the Parliamentary Assembly of the Council of Europe (PACE). The report starts from the premise that new technologies are ‘blurring the boundaries between human and machine, between online and offline activities, between the physical and the virtual world, between the natural and the artificial, and between reality and virtuality’ (Rathenau Instituut, 2017, p. 10). It continues by addressing potentially negative impacts of robotics and related technologies – especially the nano-, bio-, information and cognitive (NBIC) technologies convergence – on a number of issues related to human rights: respect for private life, human dignity, ownership, safety and liability, freedom of expression, prohibition of discrimination, access to justice and access to a fair trial. As for robotics and robots in particular, the report pays special attention to self-driving cars and care robots, recommending to the Council of Europe to reflect on and develop new and/or more refined legal tools for regulating the usage of such devices. It also recommends the introduction of two novel human rights: the right not to be measured, analysed or coached (related to possible misuses of AI, data gathering and the Internet of Things) and the right to meaningful human contact (related to possible misuses, intentional and unintentional, of care robots).”[2]

“The main reason why robotics remains legally underregulated probably comes down to the fact that robotics is a new and rapidly advancing field of research whose impact on the real world and legal consequences is difficult to conceptualize and anticipate. According to Asaro (2012), all harms potentially caused by robots and robotic technology are currently covered by civil laws on product liability, i.e. robots are treated in the same way as any other technological product (e.g. toys, cars or weapons). Harm that could ensue from the use of robots is dealt with in terms of product liability laws, e.g. ‘negligence’, ‘failure to warn’, ‘failure to take proper care’, etc. However, this application of product liability laws on the robot industry is likely to become inadequate as commercially available robots become more sophisticated and autonomous, blurring the demarcation line between responsibilities of robot manufacturers and responsibilities of robot users (Asaro, 2012).”[3]

Recommendations of COMEST on robotics ethics

Robotics and gender

Women are called to involve themselves actively in robot ethics to ensure that human beings, not machines, remain at the center. In addition, COMEST's Recommendation VI.3.11 on Gender Equality states: “Particular attention should be paid to gender issues and stereotyping with reference to all types of robots described in this report, and in particular, toy robots, sex companions, and job replacements.”[4]

Robotics and environment

COMEST's Recommendation VI.3.12 on Environmental Impact Assessment states: “Similar to other advanced technologies, environmental impact should be considered as part of a lifecycle analysis, to enable a more holistic assessment of whether a specific use of robotics will provide more good than harm for society. This should address the possible negative impacts of production, use and waste (e.g., rare earth mining, e-waste, energy consumption), as well as potential environmental benefits. While constructing robots (nano, micro or macro), efforts should be made to use degradable materials and environmentally friendly technology, and to improve the recycling of materials.”[5]

Recommendations on the Internet of Things

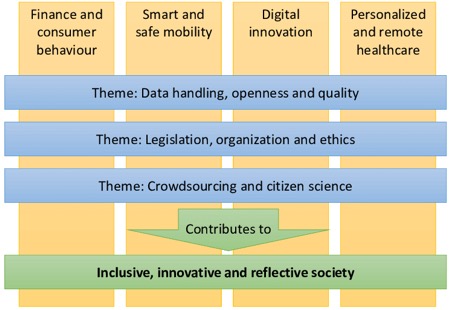

- The Internet of Things (IoT) is a rapidly emerging technology in which smart everyday physical devices, including home appliances, are interconnected. This makes it possible to use devices as sensors and to collect (big) data that can be used for many purposes.

- These networks of everyone and everything create entirely new options in many areas, such as augmented reality, tactile inter-networking, distributed manufacturing, and smart cities.

- While IoT immediately raises ethical questions related to privacy, safety, etc., the next generation of IoT, sometimes referred to as IoT++, is even more challenging. In IoT++, artificial intelligence (AI) is used to process the collected data. The resulting cognitive algorithms, acting as independent learners, may lead to unpredictable results.

- Similarly, emerging technologies create small-size robots which can serve as mobile sensors, collecting information in targeted locations – enlarging the scope of IoT even beyond existing ‘things’.

- Recognizing that the ethical challenges of IoT are similar, yet not identical, to those of cognitive robotics, the Commission recommends extending its work in this area to study IoT ethics and provide appropriate recommendations.[6]

[1] COMEST, Report of COMEST on Robotics Ethics, p. 2.
